Research

My research focusses on continual learning for robotics. My interests also include reinforcement learning for robots and learning from demonstrations. Below are a few of the projects that I am working on or have worked on in the past. Please refer to the publications page for bibliographic details and to the code page for open-source code repositories.

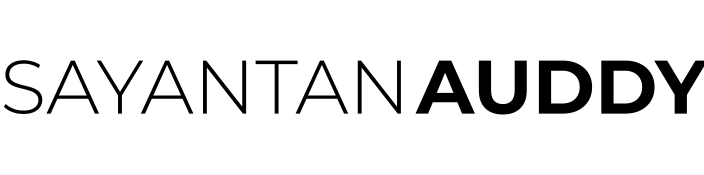

Scalable and Efficient Continual Learning from Demonstration via Hypernetwork-generated Stable Dynamics Model

We propose an approach for stable continual learning from demonstration (LfD) that addresses the need for both accuracy and stability when training robots via LfD. Our method introduces stability through a hypernetwork that generates two networks: a trajectory learning model and a trajectory-stabilizing Lyapunov function. This not only ensures stable learning of multiple motion skills but, more importantly, also greatly improves continual learning performance, especially for size-efficient chunked hypernetworks. Our model can be trained continually to predict robot end-effector trajectories across diverse real-world tasks without retraining on past demonstrations. Additionally, we introduce stochastic regularization with a single task embedding in hypernetworks, which significantly reduces the cumulative training time without loss of performance. Empirical evaluations on LASA, high-dimensional LASA extensions, and a new RoboTasks9 dataset demonstrate superior performance in trajectory, stability, and continual learning metrics.
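
To illustrate how a Lyapunov function can stabilize a learned trajectory model, here is a minimal sketch. It rests on assumptions that are not taken from the project description: the nominal model is a hand-written unstable linear system rather than a hypernetwork-generated network, the Lyapunov function is fixed to V(x) = ||x||^2 rather than learned, and the correction is a commonly used projection that removes the part of the velocity violating dV/dt <= -alpha * V. All function names are hypothetical.

```python
import numpy as np

def nominal_dynamics(x):
    # Hypothetical stand-in for the learned trajectory model:
    # a rotation with outward drift, unstable on its own.
    A = np.array([[0.5, -2.0], [2.0, 0.5]])
    return A @ x

def lyapunov(x):
    # Assumed fixed Lyapunov candidate V(x) = ||x||^2 (learned in practice).
    return float(x @ x)

def lyapunov_grad(x):
    return 2.0 * x

def stable_dynamics(x, alpha=1.0, eps=1e-12):
    # Project the nominal velocity so that dV/dt <= -alpha * V holds.
    f = nominal_dynamics(x)
    g = lyapunov_grad(x)
    violation = max(0.0, float(g @ f) + alpha * lyapunov(x))
    return f - g * violation / (float(g @ g) + eps)

def rollout(x0, dt=0.01, steps=1000):
    # Explicit Euler integration of the stabilized system.
    x = np.asarray(x0, dtype=float)
    traj = [x.copy()]
    for _ in range(steps):
        x = x + dt * stable_dynamics(x)
        traj.append(x.copy())
    return np.array(traj)

trajectory = rollout([1.0, 1.0])
```

In this sketch the nominal system spirals away from the origin, whereas the projected system converges to it. The correction is inactive wherever the nominal model already satisfies the decrease condition.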

Continual Learning from Demonstration of Robotics Skills

We present the first approach for continual learning from demonstration, in which we use hypernetworks to generate neural ordinary differential equation solvers. Our method enables robots to learn new movement skills without forgetting past tasks, and without needing to retrain on past demonstrations. Empirical results, evaluated on LASA and two new datasets (HelloWorld and RoboTasks), demonstrate superior performance in both trajectory and continual learning metrics compared to approaches from all other families of continual learning methods.
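
The core idea of a hypernetwork producing the parameters of a task-specific dynamics model can be sketched as follows. This is only an illustration under stated assumptions: the hypernetwork is a single untrained linear layer, the target network is a tiny MLP, a fixed-step Euler integrator stands in for a neural ODE solver, and every name, size, and constant is hypothetical.

```python
import numpy as np

rng = np.random.default_rng(0)
STATE_DIM, HIDDEN, EMB_DIM = 2, 16, 8

# Parameter shapes of the target network (a two-layer MLP).
SHAPES = [(HIDDEN, STATE_DIM), (HIDDEN,), (STATE_DIM, HIDDEN), (STATE_DIM,)]
N_TARGET = sum(int(np.prod(s)) for s in SHAPES)

# Hypernetwork weights (random here; trained in practice).
H_W = rng.normal(scale=0.1, size=(N_TARGET, EMB_DIM))
H_b = np.zeros(N_TARGET)

# One embedding vector per task; new tasks add new embeddings.
task_embeddings = {0: rng.normal(size=EMB_DIM), 1: rng.normal(size=EMB_DIM)}

def generate_target_params(task_id):
    # Map a task embedding to the flat parameter vector, then unflatten it.
    flat = H_W @ task_embeddings[task_id] + H_b
    params, offset = [], 0
    for shape in SHAPES:
        size = int(np.prod(shape))
        params.append(flat[offset:offset + size].reshape(shape))
        offset += size
    return params

def target_dynamics(x, params):
    # Target network: predicts the state derivative dx/dt.
    W1, b1, W2, b2 = params
    return W2 @ np.tanh(W1 @ x + b1) + b2

def predict_trajectory(task_id, x0, dt=0.05, steps=50):
    # Euler integration of the generated dynamics model.
    params = generate_target_params(task_id)
    x = np.asarray(x0, dtype=float)
    traj = [x.copy()]
    for _ in range(steps):
        x = x + dt * target_dynamics(x, params)
        traj.append(x.copy())
    return np.array(traj)

traj_task0 = predict_trajectory(0, [0.5, -0.5])
traj_task1 = predict_trajectory(1, [0.5, -0.5])
```

Because only the task embedding changes between tasks, the same hypernetwork weights can serve every learned skill, and selecting a skill amounts to selecting its embedding.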

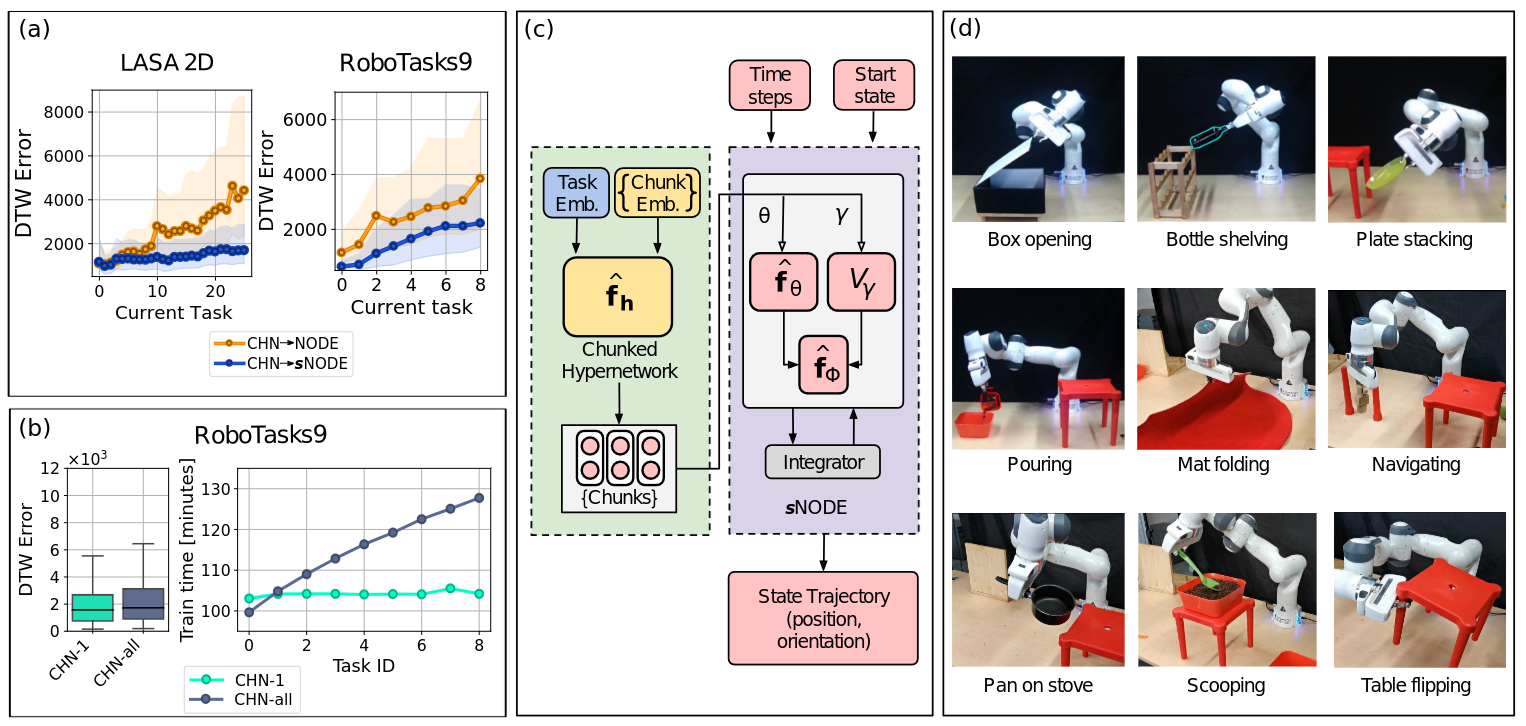

Sim2Real Transfer with Continual Domain Randomization

Domain randomization is a commonly used method for Sim2Real transfer in robotics. Often, multiple robot parameters are randomized together in an attempt to create a simulation model that is robust to the stochastic nature of the real world. However, at the onset of training in simulation, it may not be clear which parameters should be randomized. Moreover, excessive randomization can prevent the robot model from learning in simulation. In this work, we show that we can start from a model trained in ideal simulation and then randomize one parameter at a time, using continual learning to remember the effects of previous randomizations. After all the randomizations, we obtain a model that learns effectively in simulation and also performs more robustly in the real world than the baselines.
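
The one-parameter-at-a-time schedule can be sketched as below. The parameter names, nominal values, and ranges are hypothetical, and the quadratic retention penalty is a generic stand-in for a regularization-based continual learning method; the project description does not state which method is used.

```python
import numpy as np

# Hypothetical simulator parameters: ideal values and randomization ranges.
NOMINAL = {"friction": 1.0, "latency_s": 0.0, "motor_gain": 1.0}
RANGES = {"friction": (0.5, 1.5), "latency_s": (0.0, 0.05), "motor_gain": (0.8, 1.2)}

def stage_schedule():
    # Stage 0 is the ideal simulation; each later stage randomizes one parameter.
    return [None] + list(RANGES)

def sample_sim_params(active, rng):
    # Only the active parameter is randomized; the rest stay at nominal values.
    params = dict(NOMINAL)
    if active is not None:
        low, high = RANGES[active]
        params[active] = float(rng.uniform(low, high))
    return params

def retention_penalty(theta, anchor, importance, strength=1.0):
    # Generic quadratic penalty that discourages drifting from the weights
    # learned in earlier stages (an assumed stand-in, not the source's method).
    return 0.5 * strength * float(np.sum(importance * (theta - anchor) ** 2))

rng = np.random.default_rng(0)
for active in stage_schedule():
    episode_params = sample_sim_params(active, rng)
    # A training loop would run episodes with episode_params here and add
    # retention_penalty(...) to the task loss for every stage after the first.
```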

Direct Imitation Learning-based Visual Servoing using the Large Projection Formulation

This work introduces dynamical system-based imitation learning for direct visual servoing, using deep learning-based perception to extract robust features and an imitation learning strategy for sophisticated robot motions. Integrated with the large projection task priority formulation, the proposed method achieves complex tasks with a robotic manipulator, as demonstrated through extensive experiments.

Hierarchical Control for Bipedal Locomotion using Central Pattern Generators and Neural Networks

We introduce a novel hierarchical control approach for bipedal locomotion, addressing challenges in synchronizing joint movements and achieving high-level objectives. Our method utilizes an optimized central pattern generator (CPG) network for joint control, while a neural network serves as a high-level controller to modulate the CPG network. By separating motion generation from modulation, the high-level controller can achieve goals using a low-dimensional control signal, avoiding direct control of individual joints. The efficacy of our hierarchical controller is demonstrated in simulation experiments with the Neuro-Inspired Companion (NICO) robot, showcasing adaptability even without an accurate robot model.
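
The separation between motion generation and modulation can be sketched with a toy example. It assumes a CPG made of two coupled phase oscillators (a left and a right joint held in antiphase) and a fixed-weight linear layer standing in for the high-level neural network. The oscillator model, the two-dimensional control signal (frequency and amplitude), and all constants are illustrative assumptions rather than the controller used on NICO.

```python
import numpy as np

def cpg_step(phases, freq_hz, coupling, phase_offsets, dt):
    # Coupled phase oscillators: each phase advances at the commanded
    # frequency and is pulled toward the desired offsets to the others.
    diff = phases[None, :] - phases[:, None] - phase_offsets
    dphi = 2.0 * np.pi * freq_hz + coupling * np.sin(diff).sum(axis=1)
    return phases + dt * dphi

def high_level_controller(observation, W, b):
    # Stand-in for the high-level network: maps an observation to a
    # low-dimensional modulation signal (frequency, amplitude).
    raw = W @ observation + b
    freq_hz = 0.5 + 1.5 / (1.0 + np.exp(-raw[0]))   # within (0.5, 2.0) Hz
    amplitude = 0.6 / (1.0 + np.exp(-raw[1]))       # within (0, 0.6) rad
    return freq_hz, amplitude

def simulate(observation, steps=2000, dt=0.01):
    W, b = np.eye(2), np.zeros(2)
    # phase_offsets[i, j] is the desired value of phases[j] - phases[i].
    offsets = np.array([[0.0, np.pi], [-np.pi, 0.0]])
    phases = np.array([0.0, 0.3])
    targets = []
    for _ in range(steps):
        freq_hz, amplitude = high_level_controller(observation, W, b)
        phases = cpg_step(phases, freq_hz, 2.0, offsets, dt)
        targets.append(amplitude * np.sin(phases))  # joint angle targets
    return phases, np.array(targets)

final_phases, joint_targets = simulate(np.array([0.0, 0.0]))
```

The high-level controller only ever outputs two numbers, while the oscillator coupling keeps the joints synchronized, which reflects the division of labor described above.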